What is MCP

Model Context Protocol (MCP) is a new open standard that makes it easier for AI systems to connect with external data and services.

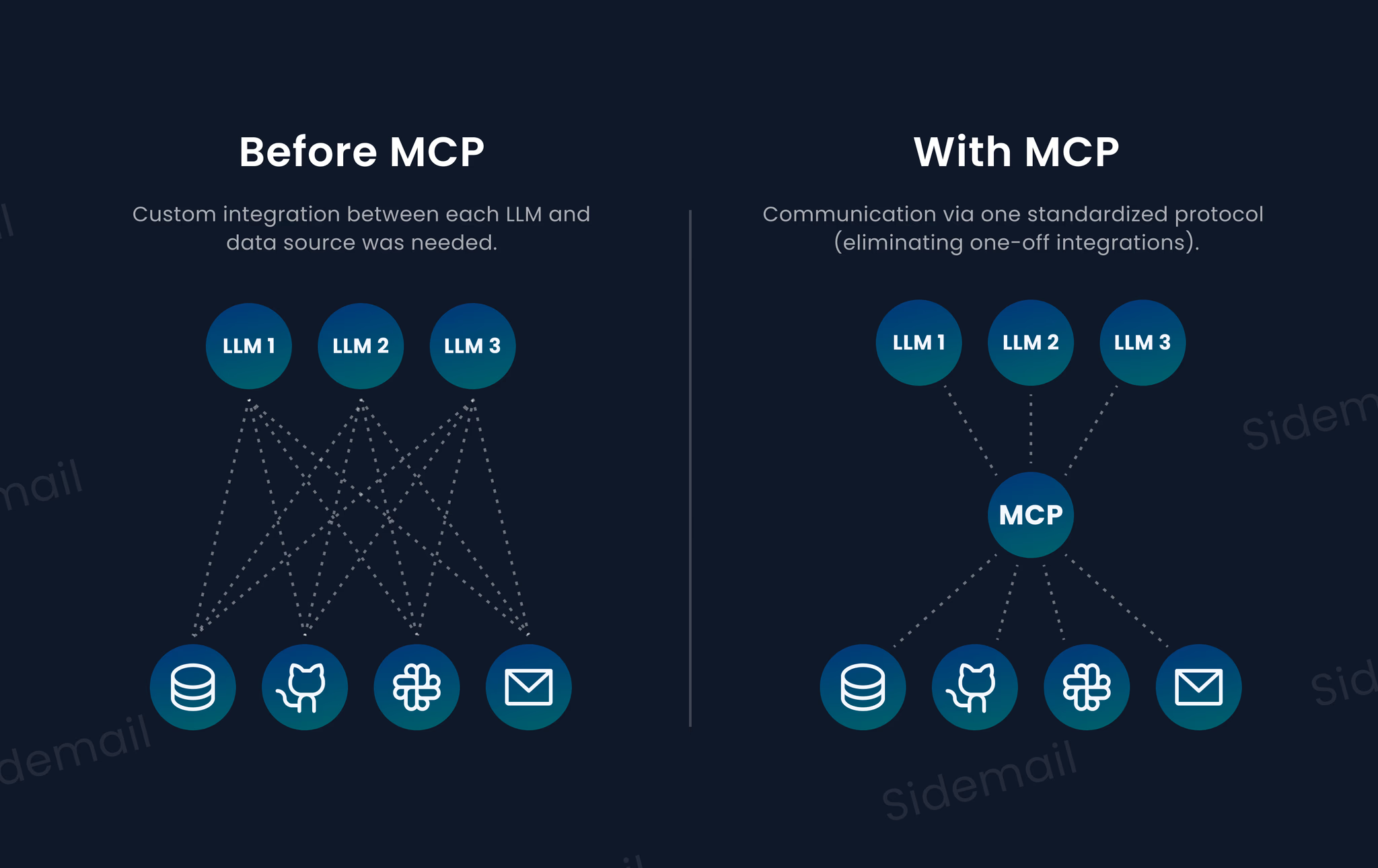

In simple terms, MCP acts a bit like an API for AI models – it provides a standard “language” for AI programs to access tools or data from the outside world. This means developers no longer have to write one-off integrations for each service; instead, an AI can use MCP to securely interface with many different data sources through a unified protocol.

Think of MCP like a USB-C port for AI applications: it’s a single, standardized way to plug an AI model into various databases, apps, or APIs.

MCP was developed by Anthropic (the AI company behind Claude) and open-sourced in late 2024. It quickly gained traction as a standard for connecting large language models (LLMs) to the systems where real data lives. As a result, AI applications can now go beyond their training data by pulling in live information and taking actions through external tools.

Real-world MCP examples

Example 1 – Café reservation

To illustrate, imagine you have an AI assistant that needs to make a reservation for a meeting at a café.

Normally, the AI might not know anything beyond its static training data. But if we give it a way to access an external system (such as a phone or booking API), it can get the job done. MCP is like giving the AI a phone number to call: it can reach out to a service, ask for what it needs, and act on the response.

In practice, this means an AI agent (a program built on an LLM) could use MCP to check your calendar, find an open slot, call the café’s booking system, and reserve a table, all autonomously. This ability to retrieve up-to-date information and act in external apps is what makes an AI agentic: it can carry out a task, not just answer questions about it.

Example 2 – E-commerce customer support

Another example is a direct-to-consumer e-commerce brand overwhelmed by a steady stream of “Where’s my order?” messages.

A human agent normally has to juggle three dashboards – Shopify for the order record, ShipStation for live tracking, and Stripe for any refund.

With MCP in the loop, the customer-support AI handles everything inside the chat or email thread in one smooth sweep. It takes the buyer’s order number, queries Shopify through an MCP server to fetch the purchase details, and requests real-time tracking data from ShipStation. If the package shows a failed delivery, it triggers a refund via Stripe and sends an apology email that includes a goodwill discount code.

Because every step is a structured MCP request and result, each interaction can be audit-logged at the protocol layer, giving finance a clean trail for any chargeback review while cutting response time from minutes to seconds.

How MCP works

Under the hood, MCP follows a client-server architecture. Here’s what that means:

- MCP client

  - Every AI app (a chatbot, an AI-powered IDE, etc.) includes a small MCP client.

  - Think of it as a built-in plug that lets the AI talk to outside tools.

- MCP server

  - Each outside service (for example, your database, Gmail, Google Drive, and so on) is exposed through its own small MCP server.

  - The server is a translator that turns the service’s features into tools, data, and prompts the AI can understand.

When the AI needs a tool, the host app’s MCP client opens a direct connection to that tool’s server, and each client holds exactly one server connection. The same AI app can keep several of these connections open in parallel: one to Google Drive, another to Gmail, another to your database, all managed quietly in the background by the host app.
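A minimal Python sketch of that arrangement, assuming a simplified in-process transport: each hypothetical “server” is just a function that takes a request dictionary and returns a response. `Host`, `Client`, and `echo_server` are illustrative names, not part of any MCP SDK. The point is the shape: the host keeps one client per server and routes each `tools/call` request to the right connection.

```python
import itertools
from typing import Callable

# Hypothetical transport: a "server" is any function mapping a request dict to a response dict.
ServerFn = Callable[[dict], dict]


class Client:
    """One client = one connection to exactly one server."""

    def __init__(self, server: ServerFn) -> None:
        self._server = server
        self._ids = itertools.count(1)  # request ids only need to be unique per connection

    def call_tool(self, name: str, arguments: dict) -> dict:
        request = {"jsonrpc": "2.0", "id": next(self._ids), "method": "tools/call",
                   "params": {"name": name, "arguments": arguments}}
        return self._server(request)["result"]


class Host:
    """The AI app: keeps one client per connected server and routes calls to it."""

    def __init__(self) -> None:
        self._clients: dict[str, Client] = {}

    def connect(self, label: str, server: ServerFn) -> None:
        self._clients[label] = Client(server)

    def call_tool(self, label: str, name: str, arguments: dict) -> dict:
        return self._clients[label].call_tool(name, arguments)


def echo_server(tag: str) -> ServerFn:
    """Hypothetical stub server that reports which server ran which tool."""
    def handle(request: dict) -> dict:
        params = request["params"]
        text = f"{tag} ran {params['name']} with {params['arguments']}"
        return {"jsonrpc": "2.0", "id": request["id"],
                "result": {"content": [{"type": "text", "text": text}]}}
    return handle


host = Host()
host.connect("drive", echo_server("drive"))
host.connect("gmail", echo_server("gmail"))
reply = host.call_tool("gmail", "search", {"query": "invoice"})
print(reply["content"][0]["text"])  # gmail ran search with {'query': 'invoice'}
```

In a real app, each client would talk to its server over a transport such as standard I/O or HTTP, and the host would discover each server’s tools at runtime instead of hard-coding tool names.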

MCP message types

All communication between the AI client and the servers happens via structured JSON messages that follow the JSON-RPC 2.0 format. MCP defines a few basic message types:

- Requests: Ask the other side to do something or provide data (and expect a response).

- Results: Successful responses to a request, containing the data or confirmation.

- Errors: Indicate a request failed or couldn’t be processed.

- Notifications: One-way messages that don’t require any reply (e.g. a status update or event notice).
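To make the four types concrete, here is a small Python sketch of what each might look like on the wire, plus a helper that tells them apart purely from JSON-RPC 2.0 structure. The tool name `get_weather` and its arguments are invented for illustration; `tools/call` and `notifications/progress` are method names used by MCP.

```python
import json

# Hypothetical example messages; "get_weather" is an invented tool name.
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "get_weather", "arguments": {"city": "Prague"}}}
result = {"jsonrpc": "2.0", "id": 1,
          "result": {"content": [{"type": "text", "text": "Sunny, 21 degrees"}]}}
error = {"jsonrpc": "2.0", "id": 2,
         "error": {"code": -32601, "message": "Method not found"}}
notification = {"jsonrpc": "2.0", "method": "notifications/progress",
                "params": {"progressToken": "job-1", "progress": 50, "total": 100}}


def classify(msg: dict) -> str:
    """Tell the four message kinds apart using only JSON-RPC 2.0 structure."""
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in msg:
        # A request carries an id so its response can be matched to it;
        # a notification has no id because nobody replies.
        return "request" if "id" in msg else "notification"
    if "result" in msg:
        return "result"
    if "error" in msg:
        return "error"
    raise ValueError("message has neither method, result, nor error")


for msg in (request, result, error, notification):
    # Each message travels as a single JSON object.
    print(classify(msg), json.dumps(msg))
```

Note that the result reuses the request’s `id` (1): that shared id is how the client knows which request a response answers.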

MCP processes

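A typical MCP interaction begins with a handshake (initialization): the client sends an initialize request with its protocol version and capabilities, the server replies with its own, and the client confirms with an initialized notification. At that point both sides have agreed on the protocol version and capabilities.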

After that, the client can send requests (like “retrieve file X” or “execute function Y”) and the server will return results. This request-response exchange can continue as needed, and either side can also send notifications (for example, a server could send a “data updated” notification).

When done, either party can terminate the connection gracefully. Because MCP is a protocol (like an agreed set of rules), any client and server following the spec can work together, regardless of what language or platform they’re built on.
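The handshake can be sketched in Python as follows, assuming the message shapes from the MCP specification’s 2024-11-05 revision (newer revisions exist). The in-memory `send` function stands in for a real transport, and the client and server names are placeholders.

```python
PROTOCOL_VERSION = "2024-11-05"  # one published MCP revision; newer ones exist


def initialize_request(request_id: int) -> dict:
    # The client opens with its protocol version, capabilities, and identity.
    return {"jsonrpc": "2.0", "id": request_id, "method": "initialize",
            "params": {"protocolVersion": PROTOCOL_VERSION,
                       "capabilities": {},
                       "clientInfo": {"name": "example-client", "version": "0.1.0"}}}


def make_fake_server(log: list):
    """Hypothetical in-memory server that records every method it receives."""
    def send(msg: dict):
        log.append(msg["method"])
        if msg["method"] == "initialize":
            return {"jsonrpc": "2.0", "id": msg["id"],
                    "result": {"protocolVersion": msg["params"]["protocolVersion"],
                               "capabilities": {"tools": {}},
                               "serverInfo": {"name": "example-server", "version": "0.1.0"}}}
        return None  # notifications get no response
    return send


def handshake(send) -> dict:
    reply = send(initialize_request(1))
    agreed = reply["result"]["protocolVersion"]
    if agreed != PROTOCOL_VERSION:
        raise RuntimeError(f"server offered unsupported protocol version {agreed}")
    # Tell the server the client is ready; this is a notification (no id).
    send({"jsonrpc": "2.0", "method": "notifications/initialized"})
    return reply["result"]["capabilities"]


log = []
capabilities = handshake(make_fake_server(log))
print(log)           # ['initialize', 'notifications/initialized']
print(capabilities)  # {'tools': {}}
```

The capabilities returned here (`{"tools": {}}`) tell the client which kinds of requests, such as listing and calling tools, the server is prepared to handle for the rest of the session.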

Types of MCP connections

MCP is flexible in terms of where these components run. The connections can be local or remote. An MCP server might run as a local process on the same machine (communicating via standard I/O streams), or it could be a remote web service (communicating over HTTP). This flexibility means MCP can operate within a closed enterprise network or across the internet.
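For the local case, the MCP specification’s stdio transport sends messages as newline-delimited JSON: one message per line, with no raw newlines inside a message. A minimal Python sketch of that framing, using `io.StringIO` in place of a real pipe (a real host would use the server process’s stdin and stdout); the `send_email` tool is hypothetical.

```python
import io
import json


def write_message(stream, msg: dict) -> None:
    """Send one message as a single line of JSON (stdio-style framing)."""
    line = json.dumps(msg)  # no indent, and newlines inside strings are escaped as \n
    if "\n" in line:
        raise ValueError("encoded message must not contain a raw newline")
    stream.write(line + "\n")
    stream.flush()


def read_messages(stream):
    """Yield one parsed message per non-empty line."""
    for line in stream:
        if line.strip():
            yield json.loads(line)


pipe = io.StringIO()  # stands in for the server process's stdin/stdout
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                     "params": {"name": "send_email",
                                "arguments": {"body": "Hi,\nyour order shipped."}}})
pipe.seek(0)
for message in read_messages(pipe):
    print(message["method"])  # tools/list, then tools/call
```

Because `json.dumps` escapes the newline in the email body, the multi-line text still travels on a single line and is restored intact when parsed. Remote servers skip this framing and exchange the same JSON messages over HTTP instead.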

MCP essentially standardizes how an AI agent “talks” to tools. The AI doesn’t call an external API directly. Instead, it issues a structured MCP request to a server, and the server handles the actual API calls or data queries, then returns a result to the AI. This indirection adds a clear separation and security layer: the AI sees only the data the server returns, in a uniform format, and can invoke only the operations the server exposes. Developers can thus control what actions the AI is allowed to take by choosing which MCP servers to connect and which functions each one offers.
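The “only what the server exposes” idea can be sketched as a tiny Python server that answers `tools/list` and `tools/call` from an explicit registry. `ToolServer` and `get_order_status` are hypothetical names, the input schema is simplified, and the error codes are the standard JSON-RPC 2.0 ones: this sketch reports an unknown tool with -32602 (Invalid params) and an unknown method with -32601 (Method not found).

```python
from typing import Callable


class ToolServer:
    """Hypothetical minimal MCP-style server: only registered tools can be called."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., str]] = {}

    def tool(self, fn: Callable[..., str]) -> Callable[..., str]:
        # Decorator: registering a function is the only way to expose it.
        self._tools[fn.__name__] = fn
        return fn

    def handle(self, msg: dict) -> dict:
        method, req_id = msg.get("method"), msg.get("id")
        if method == "tools/list":
            tools = [{"name": name,
                      "description": (fn.__doc__ or "").strip(),
                      "inputSchema": {"type": "object"}}  # schema simplified for the sketch
                     for name, fn in self._tools.items()]
            return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
        if method == "tools/call":
            name = msg["params"]["name"]
            if name not in self._tools:
                return self._error(req_id, -32602, f"Unknown tool: {name}")
            text = self._tools[name](**msg["params"].get("arguments", {}))
            return {"jsonrpc": "2.0", "id": req_id,
                    "result": {"content": [{"type": "text", "text": text}], "isError": False}}
        return self._error(req_id, -32601, "Method not found")

    @staticmethod
    def _error(req_id, code: int, message: str) -> dict:
        return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


server = ToolServer()


@server.tool
def get_order_status(order_id: str) -> str:
    """Look up an order's shipping status (canned data for the sketch)."""
    return f"Order {order_id}: shipped"


ok = server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                    "params": {"name": "get_order_status", "arguments": {"order_id": "1001"}}})
denied = server.handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                        "params": {"name": "delete_all_orders", "arguments": {}}})
print(ok["result"]["content"][0]["text"])  # Order 1001: shipped
print(denied["error"]["message"])          # Unknown tool: delete_all_orders
```

The AI can ask for `delete_all_orders`, but because nobody registered it, the server refuses; the allowlist lives in the server, not in the model.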

Summary

The Model Context Protocol represents a significant evolution in how we integrate AI into real-world applications. It started with a simple idea – give AI a standard way to access external data and tools – and that idea is unlocking more powerful and context-aware AI behavior across industries. MCP makes it feasible to connect an LLM to your business systems (databases, CRM, email, etc.) securely and seamlessly, so the AI can provide relevant, up-to-date answers and even take actions on behalf of users.

For software developers and SaaS product teams, MCP offers a path to add smart AI features to a product without reinventing the wheel for each integration.

In the near future, MCP may become as widespread for AI tools as REST APIs are for web services. For those building SaaS products, it’s worth exploring how MCP could connect your AI models to your context (your database, your users’ emails, or your internal APIs) to deliver smarter, more proactive functionality.

The result is AI systems that are context-aware, capable, and truly helpful in real-world tasks, from sending the right email at the right time to handling complex multi-system workflows, all through one well-defined protocol.

👉 Try Sidemail today

Dealing with emails and choosing the right email service is not easy. We’ll help you simplify it. Create your account now and start sending your emails in under 30 minutes.